Prerequisites

Apache Hadoop Apache Pig is a platform build on the top of Hadoop. You can refer to our previously published article to install a Hadoop single node cluster on Windows 10.

7zip/Winrar 7zip/Winrar is needed to extract .tar.gz archives we will be downloading in this guide.

Downloading Apache Pig

Download the Apache Pig

After the file is downloaded, we should extract it twice using 7zip (using 7zip: the first time we extract the .tar.gz file, the second time we extract the .tar file). We will extract the Pig folder into C:\hadoop-env directory as used in the previous articles. Or you could use winzip to extract it.

Setting Environment Variables

After extracting Derby and Hive archives, we should go to Control Panel > System and Security > System. Then Click on Advanced system settings.

In the advanced system settings dialog, click on Environment variables button.

Now we should add the following user variables:

- PIG_HOME:

C:\hadoop-env\pig-0.17.0

hadoop-envis the folder name of your hadoop.

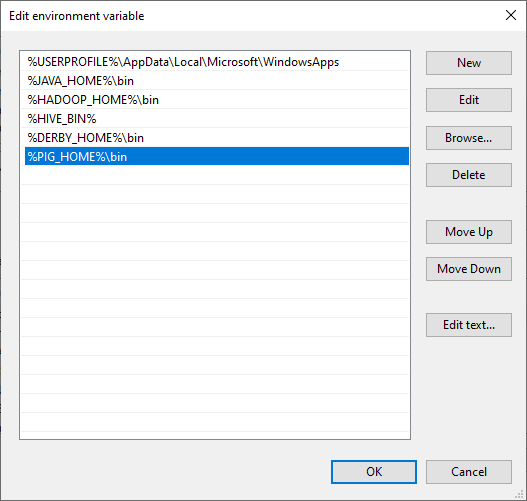

Now, we should edit the Path user variable to add the following paths:

- %PIG_HOME%\bin

Edit pig.cmd file

Edit file D:/Pig/pig-0.17.0/bin/pig.cmd, make below changes and save this file.

- set HADOOP_BIN_PATH=%HADOOP_HOME%\libexec

Validate Pig Installation Pig

Post successful execution of Hadoop and verify the installation.

C:\WINDOWS\system32>pig -version

Apache Pig version 0.17.0 (r1797386)

compiled Jun 02 2017, 15:41:58

If you have encountered any problems, please read through the Troubleshooting section.

Example Script

Start the hadoop

Browse through the sbin directory of Hadoop and start yarn and Hadoop dfs (distributed file system) as shown below.

C:\WINDOWS\system32>cd %HADOOP_HOME%/sbin/

C:\WINDOWS\system32>start-all.cmd

Remember to run as administrator when executing CMD.

Create a Directory in HDFS

In Hadoop DFS, you can create directories using the command mkdir. Create a new directory in HDFS with the name Pig_Data in the required path as shown below.

C:\WINDOWS\system32>cd %HADOOP_HOME%/bin/

C:\WINDOWS\system32>hdfs dfs -mkdir hdfs://localhost:9000/pig_data

Create a text file wikitechy_emp_details.txt delimited by ‘,’ with the content below. Place the file in C: or any directory which you preferred.

111,Anu,Shankar,23,9876543210,Chennai

112,Barvathi,Nambiayar,24,9876543211,Chennai

113,Kajal,Nayak,24,9876543212,Trivendram

114,Preethi,Antony,21,9876543213,Pune

115,Raj,Gopal,21,9876543214,Hyderabad

116,Yashika,Kannan,22,9876543215,Delhi

117,siddu,Narayanan,22,9876543216,Kolkata

118,Timple,Mohanthy,23,9876543217,Bhuwaneshwar

Move the file to HDFS

Now, move the file from the local file system to HDFS using put command as shown below. (You can use copyFromLocal command as well.)

C:\WINDOWS\system32>cd %HADOOP_HOME%/bin/

C:\WINDOWS\system32>hdfs dfs -put C:\wikitechy_emp_details.txt hdfs://localhost:9000/pig_data/

Start the Pig Grunt Shell

The simplest way to write PigLatin statements is using Grunt shell which is an interactive tool where we write a statement and get the desired output. There are two modes to involve Grunt Shell:

- Local: All scripts are executed on a single machine without requiring Hadoop. (command:

pig -x local) - MapReduce: Scripts are executed on a Hadoop cluster (command:

pig -x MapReduce)

Since we have load the wikitechy_emp_details.txt in hdfs, start the Pig Grunt shell in MapReduce mode as shown below.

C:\WINDOWS\system32>pig -x mapreduce

Load the file in a variable ‘student’

Now load the data from the file student_data.txt into Pig by executing the following Pig Latin statement in the Grunt shell.

grunt> student = LOAD 'hdfs://localhost:9000/pig_data/wikitechy_emp_details.txt'

USING PigStorage(',')

as ( id:int, firstname:chararray, lastname:chararray, phone:chararray,

city:chararray );

Check result using DUMP operator (write result to the console)

grunt> Dump student

(111,Anu,Shankar,23,9876543210)

(112,Barvathi,Nambiayar,24,9876543211)

(113,Kajal,Nayak,24,9876543212)

(114,Preethi,Antony,21,9876543213)

(115,Raj,Gopal,21,9876543214)

(116,Yashika,Kannan,22,9876543215)

(117,siddu,Narayanan,22,9876543216)

(118,Timple,Mohanthy,23,9876543217)

If you have encountered any problems, please read through the Troubleshooting section.

Troubleshooting 故障排除

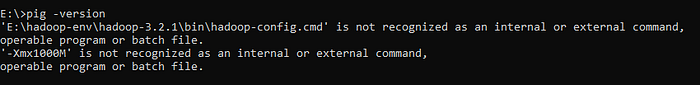

Pig is not recognized as an internal or external command

C:\WINDOWS\system32>pig -version

'E:\hadoop-env\hadoop-3.2.1\bin\hadoop-config.cmd' is not recognized as an internal or external command,

operable program or batch file.

'-Xmx1000M' is not recognized as an internal or external command,

operable program or batch file.

Make sure your is correct

From this:

set HADOOP_BIN_PATH=%HADOOP_HOME%\bin

Change to:

set HADOOP_BIN_PATH=%HADOOP_HOME%\libexec

After Dump command, the process keep looping

Pig when ran in mapreduce mode expects the JobHistoryServer to be available.

This is because the job history server is not running.

Check marped-site.xml has these properties stated or not.

To configure JobHistoryServer, add these properties to mapred-site.xml replacing hostname with actual name of the host where the process is started.

<property>

<name>mapreduce.jobhistory.address</name>

<value>hostname:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>hostname:19888</value>

</property>

The result would be like this: